WRS Challenge

The notion of telepresence is not science fiction. In the 1980s, the use of remote-control arms at nuclear facilities was already a common practice known as teleoperation. This type of operation was later extended to allow geographically remote control of such manipulators. In either application, the system consisted of an input unit controlled by the operator and an output device that executed the commands of the input unit. Telepresence builds on the latter concept: it refers to a set of technologies that allow a person to feel as if they were present, and to appear to be present, in a place other than their true location. However, the notion of “being able to feel present” is demanding, requiring the perception of touch, sight, and sound as if the individual were actually in the remote environment. This is the true vision of telepresence, and it differs from what the public has come to perceive as telepresence: the mere use of video streaming technologies on a mobile robot base.

We employ immersive telepresence technologies and endow our robot with semi-autonomy in an effort to achieve high-fidelity control. Immersive telepresence technologies are subject to bandwidth constraints that lead to latency and degradation of service, and they can impose a high cognitive load on the operator. A mixture of semi- or assisted-autonomy and telepresence can (1) help reduce this cognitive load and (2) help address the issues that arise when service degrades, mainly the disruption of task execution. Below we present some details of our humanoid robot, TeleBot, and describe version 1 (TeleBot-1) and version 2 (TeleBot-2), which we aim to deploy at the World Robot Summit.

TeleBot-2

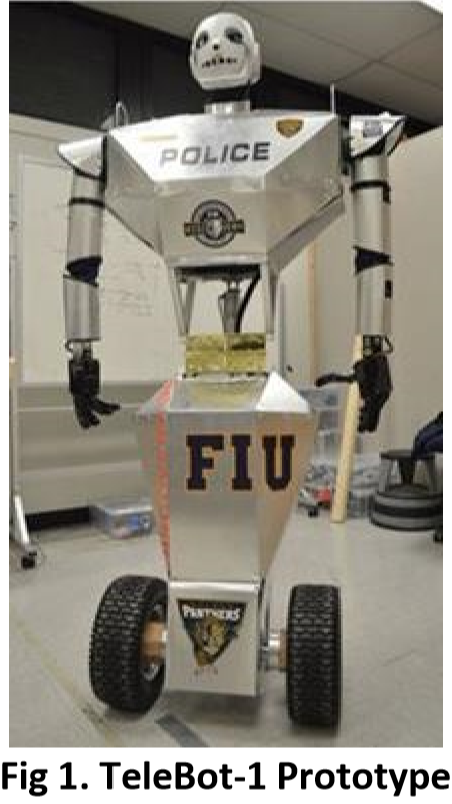

In the fall of 2012, Dr. Jong-Hoon Kim and his group of researchers set out to develop the world’s first truly immersive anthropomorphic telepresence robot: TeleBot. Six years later, Dr. Kim is joined once again by his former lead researcher, Irvin Steve Cardenas, and a group of new researchers, including Do Yeon Kim and Nathan Kanyok, at Kent State University, Ohio, USA, to develop the second generation of TeleBot, TeleBot-2: a truly immersive telepresence robot. TeleBot-1 was a 6-foot, custom-built humanoid telepresence robot whose success drew the attention of more than 500 media outlets worldwide, including Discovery Channel, Fox News, Univision Network, CBS12, Yahoo News, and Mashable. Its mechanical design combined a humanoid upper body with a wheel-based locomotion system.

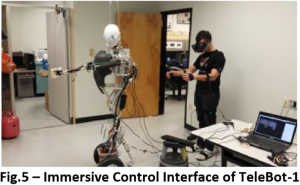

The upper body consisted of two 6-degree-of-freedom (DOF) mechanical arms, two fully actuated robotic hands, and a 2-DOF humanoid head that incorporated a set of cameras for stereoscopic vision. Pressure and temperature sensors were embedded in the robotic hands to allow operators to sense objects grasped by the robot. The locomotion system implemented a two-wheeled inverted pendulum balancing model that allowed the robot to travel smoothly over the ground without the computational complexity of walking.

The operator system included several interfaces for controlling TeleBot. The most widely used was our motion capture suit, which captured the operator’s motion data and relayed it to the robot for replication. The suit also incorporated haptic technologies that allowed the user to feel tactile features of the remote environment. Other interfaces used electromyography (EMG) to let the user control certain parts of the robot through muscle movements. The robotic hands were actuated via sensory gloves that sensed abduction and adduction of the operator’s hands.
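The balancing principle behind a two-wheeled inverted pendulum can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not TeleBot-1’s actual controller: the robot is reduced to a point mass on a massless rod of hypothetical length `length`, the wheels set the horizontal acceleration `u` of the base, and a proportional-derivative (PD) law with illustrative gains `kp` and `kd` drives the base toward the side the body is falling to. Linearizing `theta'' = (g*sin(theta) - u*cos(theta)) / length` around upright shows the posture is stable when `kp > g` and `kd > 0`.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def simulate_balance(theta0: float, kp: float = 30.0, kd: float = 5.0,
                     length: float = 1.0, dt: float = 0.001,
                     steps: int = 5000) -> float:
    """Return the tilt angle (rad) after `steps` control cycles.

    Hypothetical point-mass model: theta is the tilt from vertical and u is
    the horizontal acceleration the wheels impose on the base.
    """
    theta, omega = theta0, 0.0
    for _ in range(steps):
        # PD law: accelerate the base toward the side the body is falling to.
        u = kp * theta + kd * omega
        # Inverted pendulum dynamics with an accelerating pivot.
        alpha = (G * math.sin(theta) - u * math.cos(theta)) / length
        # Semi-implicit Euler integration.
        omega += alpha * dt
        theta += omega * dt
    return theta


if __name__ == "__main__":
    print(f"with PD control, 5 s: {simulate_balance(0.1):.2e} rad")
    print(f"no control, 1 s: {simulate_balance(0.1, kp=0.0, kd=0.0, steps=1000):.2f} rad")
```

With the default gains, the linearized closed loop has poles near -2.5 ± 3.7i, so a 0.1 rad tilt decays toward zero; with both gains set to zero the tilt grows, which is why the wheeled base must keep moving to stay upright.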

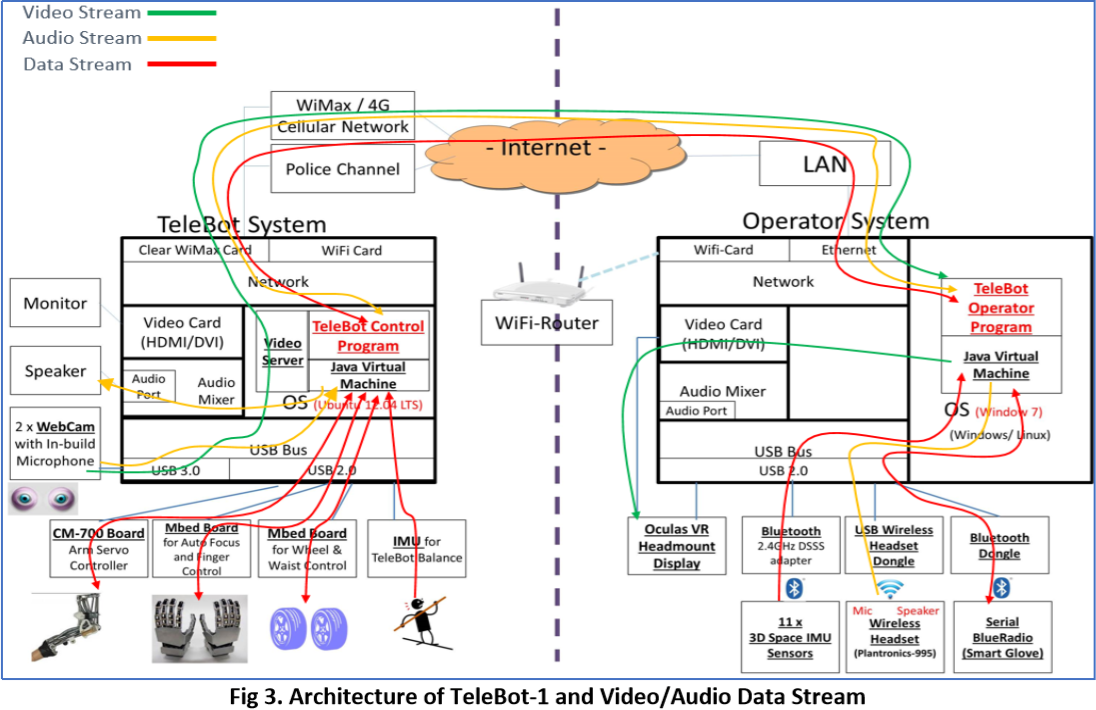

The software architecture of TeleBot-1, developed specifically for TeleBot, evolved from a centralized model to a distributed one. Initially, the telepresence system was implemented as a client-server model in which the operator acted as a client that connected to the robot, which acted as the server. Furthermore, all subparts of the operator system were connected synchronously, so failures were unrecoverable and forced the whole system to restart.

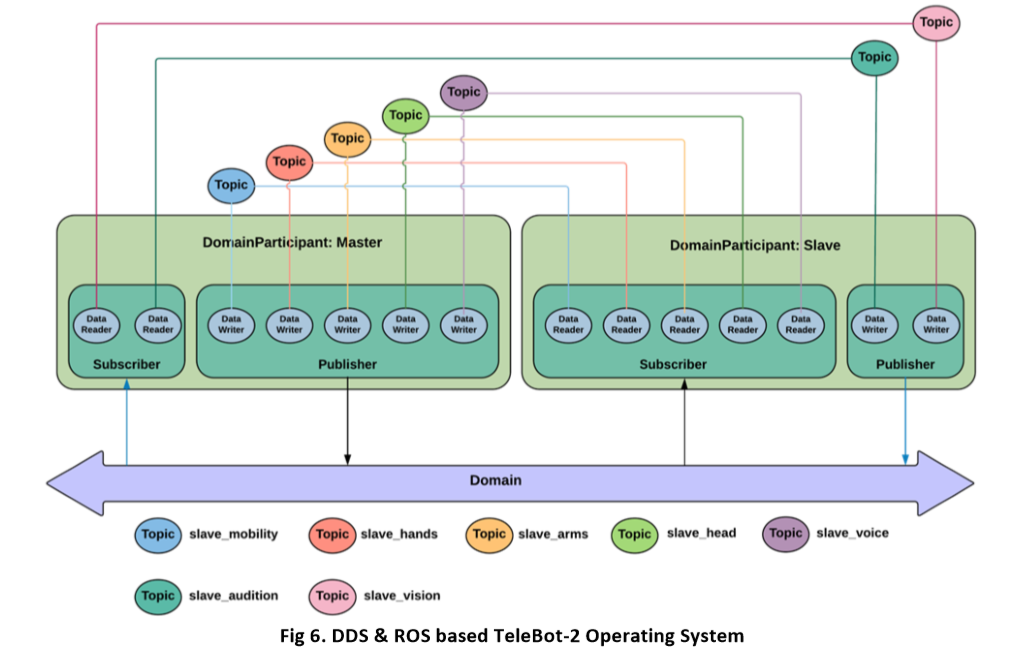

A later version of TeleBot-1 implemented a distributed architecture, leveraging an open-source implementation of the Data Distribution Service (DDS) middleware standard, which is widely used in real-time systems. DDS allowed each component within the telepresence system to run independently and interoperate with other components through publish-subscribe communication. This increased fault tolerance and enabled seamless failover within the operator system. It also allowed different interfaces to take control of any component of the robot, as long as the interface addressed the component through the appropriate communication “topic”.
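The decoupling that topic-based publish-subscribe provides can be sketched with a toy in-process bus. This is a hypothetical illustration, not the DDS API (real DDS adds discovery, typed topics, and quality-of-service policies); the `TopicBus` class and the topic name `robot/head/pan_tilt` are assumptions. Components know only topic names, so two operator interfaces can drive the same robot component, and an exception in one subscriber does not stop delivery to the others.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class TopicBus:
    """Toy publish/subscribe bus; a stand-in for DDS, not its API."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.errors: List[str] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, sample: Any) -> int:
        """Deliver `sample` to every subscriber of `topic`; return the successes."""
        delivered = 0
        for handler in list(self._subscribers[topic]):
            try:
                handler(sample)
                delivered += 1
            except Exception as exc:
                # Isolate the fault: record it and keep serving other subscribers.
                self.errors.append(f"{topic}: {exc}")
        return delivered


def faulty_logger(sample: Any) -> None:
    raise RuntimeError("logger crashed")


bus = TopicBus()
head_commands: List[Any] = []
bus.subscribe("robot/head/pan_tilt", head_commands.append)  # robot-side head controller
bus.subscribe("robot/head/pan_tilt", faulty_logger)  # a failing component

# Two interfaces publish to the same topic without knowing about each other.
bus.publish("robot/head/pan_tilt", (10.0, -5.0))  # e.g., the motion capture suit
bus.publish("robot/head/pan_tilt", (12.0, -4.0))  # e.g., a fallback interface
print(head_commands, len(bus.errors))
```

Contrast this with the synchronous client-server design described earlier, where a failure in one subpart forced a full restart: here the crashing logger is recorded in `bus.errors` while the head controller still receives both commands.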

TeleBot-2 builds on our previous work to deliver a more dynamic, immersive, and real-time telepresence system. TeleBot-2 is a hybrid bipedal robot that incorporates a dynamic wheel-based system, so it can switch between bipedal walking and wheel-based locomotion. The key advances of this robot include (1) its hybrid locomotion mechanism, (2) semi-autonomy enabled by adaptive learning, (3) immersive teleoperation, (4) sophisticated planning algorithms for manipulation and navigation, and (5) a proactive and robust TeleBot management system.

The number of legs determines the mode of locomotion and hence the complexity of control. In nature, insects and spiders can walk from birth, whereas humans require several months to crawl on arms and legs and more than a year to stand and walk upright. This indicates how complex it is to walk upright on two legs, and how much stability and control even crawling on four legs requires. Given the difficulty of developing bipedal robots robust enough for every walking terrain, TeleBot-2 incorporates a dynamic wheel system that allows our humanoid to switch from a dynamic bipedal walking gait to motorized wheel-based locomotion. This allows the humanoid to choose the most effective and efficient means of locomotion according to environmental constraints.
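A minimal sketch of how the choice between walking and rolling could be automated, assuming the perception system summarizes the terrain ahead as slope, step height, and roughness. The `Terrain` fields, the threshold values, and the hysteresis margin are all hypothetical illustrations, not TeleBot-2’s actual switching criteria. Wheels are chosen only when every measure is within limits; otherwise the robot walks.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    WHEELED = "wheeled"
    BIPEDAL = "bipedal"


@dataclass
class Terrain:
    slope_deg: float      # incline of the surface ahead (degrees)
    step_height_m: float  # largest vertical discontinuity, e.g. a stair riser (m)
    roughness: float      # 0.0 = smooth floor, 1.0 = rubble (hypothetical scale)


# Hypothetical limits for the wheel-based mode.
MAX_WHEEL_SLOPE_DEG = 10.0
MAX_WHEEL_STEP_M = 0.03
MAX_WHEEL_ROUGHNESS = 0.3


def select_mode(terrain: Terrain, current: Mode, margin: float = 0.8) -> Mode:
    """Pick a locomotion mode for the terrain ahead.

    When currently walking, switch back to wheels only if the terrain is
    within `margin` times each limit (hysteresis), which avoids rapid
    toggling when the terrain sits near a threshold.
    """
    scale = margin if current is Mode.BIPEDAL else 1.0
    wheels_ok = (
        terrain.slope_deg <= MAX_WHEEL_SLOPE_DEG * scale
        and terrain.step_height_m <= MAX_WHEEL_STEP_M * scale
        and terrain.roughness <= MAX_WHEEL_ROUGHNESS * scale
    )
    return Mode.WHEELED if wheels_ok else Mode.BIPEDAL


if __name__ == "__main__":
    print(select_mode(Terrain(2.0, 0.0, 0.1), Mode.WHEELED))   # flat corridor
    print(select_mode(Terrain(0.0, 0.17, 0.0), Mode.WHEELED))  # staircase
```

The hysteresis margin is a design choice: a 9-degree ramp keeps a rolling robot on wheels, but a walking robot would not switch to wheels for it, so small sensing fluctuations do not cause repeated mode changes.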

Unlike current teleoperated robots, TeleBot-2 introduces adaptive learning and inverse reinforcement learning so that the teleoperated robot can carry out tasks semi-autonomously under environmental constraints such as telecommunication bandwidth and GPS. We generate a training set whose features include the operator’s motions, the task, and its context, among others, and develop a model that allows the robot to predict the appropriate motions and tasks. We also use this technique to let the humanoid produce corrective movements in the presence of noise or other environmental constraints. Furthermore, we focus on immersive telepresence: allowing the operator of the humanoid to feel present in the remote environment.
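The predict-and-correct idea can be sketched with a deliberately simple learner. This sketch swaps in k-nearest-neighbor regression in place of the adaptive and inverse reinforcement learning described above, and its feature encoding, the `max_dev` threshold, and the function names are hypothetical. Each training sample pairs a numeric feature vector (standing in for operator motion, task, and context) with the joint targets the operator commanded. When a command is lost, or is implausibly far from the prediction because of a noisy link, the robot falls back to the predicted motion.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (feature vector, joint targets) pairs; the encoding is hypothetical.
Sample = Tuple[Sequence[float], Sequence[float]]


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> List[float]:
    """Average the joint targets of the k samples whose features are closest to `query`."""
    nearest = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    n_joints = len(nearest[0][1])
    return [sum(s[1][j] for s in nearest) / len(nearest) for j in range(n_joints)]


def resolve_command(received: Optional[Sequence[float]],
                    predicted: Sequence[float],
                    max_dev: float = 0.5) -> List[float]:
    """Follow the operator, unless the command is missing or deviates too far."""
    if received is None or math.dist(received, predicted) > max_dev:
        return list(predicted)  # corrective fallback to the learned motion
    return list(received)


if __name__ == "__main__":
    samples = [([0.0, 0.0], [0.0, 0.0]), ([1.0, 0.0], [1.0, 0.0]),
               ([0.0, 1.0], [0.0, 1.0]), ([5.0, 5.0], [5.0, 5.0])]
    predicted = knn_predict(samples, [0.1, 0.1])
    print(predicted)
    print(resolve_command(None, predicted))        # packet lost
    print(resolve_command([3.0, 0.3], predicted))  # noisy outlier
```

A learned model such as the one the team describes would replace `knn_predict`; the fallback logic in `resolve_command` only illustrates where a prediction could stand in for a degraded command stream.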

We employ somatosensory hijacking techniques that combine human action and environmental expectation with synthetic sensory feedback delivered through electro-stimulation, haptics, and other techniques.

TeleBot-2 currently employs custom software that uses DDS as middleware, but our current efforts also include introducing the Robot Operating System (ROS) architecture and developing ROS modules.

Teams:

Control Management | KSU ATR Lab

Director: Dr. Jong-Hoon Kim

Team Members:

ATR Team Leader: Cardenas, Irvin Steve

Teleoperation: Kim, Do Yeon

Robot Motion: Lin, Xiangxu

CAD & Manufacturing: Arch, Hadley

Human Robot Interaction: Kanyok, Nathan

Augmented Reality: Kim, SungKwan

Image Recognition: Riedlinger, Andrew

Vision System: Shaker, Alfred

CAD & ROS: Baza, Ahmed

Power & Circuit: Jung, HyunJae

Localization: Richardson, Kody

Public Relations: Levina, Anna

Suit Designer: Vitullo, Kelsey

Shell Design: Kim, Gaeun

Bio-Information: Lee, Suho

Mission Management | SCALE Team

Director: Dr. Gokarna Sharma

Team Members:

Path Planning Optimization: Poudel, Pavan

System Management | FIU Discovery

Director: S.S. Iyengar

Team Members:

Data Management Telecommunication: Prabakar, Nagarazan; Viswanathan, Subramanian